One morning I opened our referrals dashboard and found something I didn’t expect: chat interfaces were already out‑pulling social for qualified clicks. That moment sent me into a year of experiments across ChatGPT, Gemini, Perplexity, Copilot and Google’s AI Overviews. This is the long walk through what actually moves the needle, why it works, where it breaks, and how I’d run AEO (answer engine optimisation) for a startup, a scale‑up and an established brand.

TL;DR

- AEO is search with new surfaces, new weighting and a bigger tail, not a new religion. You’re still earning trust signals and relevance; you’re just optimising to be named and cited in answers rather than to own a single blue link. The head rewards brands mentioned across many high‑trust citations; the tail is far larger and more conversational than classic SEO.

- Off‑site citations are now growth assets. Reddit (with declared affiliation), YouTube/Vimeo for “boring money” B2B queries, and tier‑1 affiliates (Dotdash Meredith brands like Investopedia, Allrecipes, PEOPLE) frequently feed answer engines.

- Quality holds up: LLM‑sourced traffic can convert ~6× better than classic Google organic in B2B, and I’ve seen brands attribute ~8% of sign‑ups to LLM answers already. Treat AEO as a high‑intent, mid‑funnel channel and measure beyond last‑click.

- Crawl ≠ training: control them separately. If you want to show up in ChatGPT search but not be used for model training, allow OAI‑SearchBot, disallow GPTBot, and decide how you want to handle PerplexityBot. Policies are evolving; review quarterly.

- The impact picture is mixed and varies by vertical. Google says AI Overviews drive “higher‑quality clicks,” while multiple studies and fresh lawsuits allege reduced referrals to publishers; in some months Google has visibly dialled AIO exposure up or down. Instrument incrementality; don’t rely on narratives.

- Operate by topics, not keywords. Architect one page per topic that answers hundreds of follow‑ups; pair it with off‑site citation work and help‑centre content in a subdirectory (not a siloed subdomain) plus QAPage/DiscussionForumPosting schema.

- Build an “Answer SOV” tracker and run experiments. Measure how often you’re named across engines, prompt variants and repeated runs; intervene on half your question set and hold out the rest. Reproducibility trumps folklore.

Last January we noticed something odd: branded direct and branded search were up, but the corresponding referral trail was thin. Prospects like Bina school later told us they’d first seen us inside an answer and then typed our name into a new tab. Around the same time, more answer surfaces became explicitly clickable (cards, carousels, in‑text links), particularly in Google’s AI Overviews and product‑style modules. That was the inflection point where AEO stopped being a curiosity and started behaving like a real channel.

If you strip away the headlines, AEO is simple: models plus retrieval summarise a set of sources and attribute them. The strategic shift is that the unit of competition changes. On head queries (e.g., “best website builder”), you don’t “win” because your URL ranks #1; you win because you’re named in the most, and the best, citations the engine trusts. On the tail, chat explodes the space of questions: multi‑clause, context‑rich and often unseen in classic search logs. Early‑stage products can win tomorrow if they’re named across Reddit threads, YouTube videos and a couple of affiliate round‑ups.

Open your analytics and customer survey responses. Add a “Where did you first hear about us?” question with [ChatGPT, Gemini, Perplexity, Google AI Overviews, Reddit, YouTube, Other]. Expect answer exposure to manifest as branded clicks, not neat referrals, so measure declared attribution and build an Answer Share‑of‑Voice (A‑SOV) baseline immediately.

The mental model I use to reason about AEO

1) Two pillars: on‑site topic depth and off‑site citations

On‑site: build one page per topic (not keyword) and answer a few hundred likely follow‑ups: use cases, comparisons, integrations, pricing caveats, localisation, security, SLAs. Add FAQ/QAPage where appropriate, interlink with your docs/help centre, and keep help in a subdirectory so link equity and navigation aren’t siloed.

Off‑site: earn named mentions where engines harvest citations: Reddit (declare affiliation; be actually helpful), YouTube/Vimeo for B2B queries that few people film, and tier‑1 affiliates (Dotdash Meredith brands like Investopedia, PEOPLE, Allrecipes, The Spruce, etc.).

2) Head vs tail

- Head: the answer often privileges the brand mentioned across the most citations, not the top organic rank.

- Tail: chat’s tail is bigger and more specific. This is where early‑stage teams win first—by answering things nobody has answered properly.

3) Engines disagree: plan for diversity

In my tracking, citations used by Perplexity often correlate more with Google SERPs than ChatGPT’s do, so outreach targets differ by engine. Don’t assume one basket. (Independent analyses echo this pattern.)

Pick 15–20 money topics, map ~300–500 questions each, and split your work: 60% on off‑site citation earning, 40% on topic‑page depth and help‑centre tail. Track A‑SOV by engine and by topic.

Context & Stakes

Search behaviour is shifting from “ten blue links” to answers with citations across ChatGPT, Gemini, Perplexity, Bing Copilot and Google’s AI Overviews. The mechanisms are familiar: models + retrieval (RAG) summarise a set of sources and render one or more clickable cards/links. The strategic difference:

- Head terms: winners are those mentioned most across citations, not simply the site ranking #1 organically.

- Tail queries: the long tail is much larger in chat; buyers ask multi‑clause, context‑rich questions that never existed as classic queries.

This isn’t the end of SEO; it’s SEO with more surfaces, different weighting, and faster payback for off‑site citations. Google claims AI Overviews send “higher‑quality clicks,” while publishers increasingly contest traffic impact; you should plan for both realities by measuring incrementality and building a citations moat.

So, is AI killing clicks?

Google’s Search lead says AI Overviews drive “higher‑quality clicks,” and Google’s own blog emphasises that they still send “billions of clicks to the web.” Meanwhile, major publishers have publicly alleged and even sued over lost referrals; independent datasets have shown Google dialling AIO exposure up and down over 2024–2025. The sensible path is to assume mixed effects by vertical, build your own controls, and manage risk, not to bet your strategy on headlines.

Treat AEO as an additive channel. Build geo‑holds or question‑set holds, collect declared source on signup (“How did you first hear about us?”), and attribute presence in answers, not just traffic.

The Strategic Bet

Thesis: If we deliberately earn citations across the sources answer engines trust, and structure our own pages to answer clusters of questions, we can dominate A‑SOV on valuable topics, months before typical SEO authority would compound.

Why it’s non‑obvious: most teams still optimise for a single page to rank for a single head keyword. In AEO, the unit is a topic (hundreds of questions) and the currency is a citation (your brand named by third‑party sources).

Founder lens: early‑stage companies can win this week by earning citations on Reddit, YouTube, and authoritative affiliates, even with low domain authority.

Move budget from generic blog output to citation acquisition + topic pages that answer follow‑ups exhaustively.

Go‑to‑Market Architecture

ICP / JTBD.

- Startups: founders and PMs seeking “best X for Y” answers; buying motion often starts inside an AI chat window.

- Scale‑ups: procurement & technical evaluators who ask detailed follow‑ups (features, integrations, pricing tiers, compliance).

- Enterprises: RFP‑style questions; security, localisation, data residency; “does this integrate with our stack?”

Positioning & messaging: frame your offer around evaluation‑stage questions. Embed Information Gain (tell buyers something net‑new that others don’t) to be preferred as a citation source. (Google patents around information gain suggest uniqueness may be weighted; regardless of ranking specifics, it’s the right product marketing move.)

Channel → funnel mapping:

- On‑site (topic pages, docs, help centre): capture tail and be eligible for citation.

- Off‑site (Reddit, YouTube/Vimeo, tier‑1 affiliates such as Hearst’s Good Housekeeping or Dotdash Meredith’s Investopedia network, review sites, analysts): earn citations at scale.

Define 10–20 topics that map to revenue and build both on‑site depth and off‑site citations for each.

Acquisition Playbook

1) On‑site: Topic pages that answer clusters of questions

- Build one page per topic (e.g., “No‑code website builder”) that covers: use cases, alternatives, feature comparisons, integrations, limits, pricing scenarios, localisation, SLAs. Each page should answer hundreds of likely follow‑ups via structured sections and internal anchors.

- Add FAQ/QAPage markup where appropriate; link across sibling questions; cross‑link from docs/help centre.

- Prefer subdirectory structures for help/learn content to consolidate signals (Google says subdomain vs subfolder is broadly neutral; in practice, ops simplicity + consolidated internal linking often wins).

2) Off‑site: Earn citations where LLMs harvest

- Reddit: participate authentically. Disclose your role, provide useful, specific answers; you need dozens, not thousands. Spammy multi‑account tactics tend to get removed/banned.

- YouTube/Vimeo: prioritise “boring money” B2B terms with low video supply (e.g., AI‑enabled payment reconciliation for ERPs). These often surface in LLM citations and face little competition.

- Affiliates / Media: pay‑to‑play can be pragmatic for certain categories (credit cards, consumer tech) via Dotdash Meredith and peers; you’re effectively renting citation real estate that LLMs trust.

- Review & Q&A ecosystems (G2/Capterra, Stack Overflow, Quora): capture named mentions and answer comparisons.

- Community & forums: seed community Q&A; mark up discussion content with DiscussionForumPosting/QAPage schema to surface long‑tail answers.

3) Surfaces differ, so does strategy

- B2B: fewer direct clicks in some answer surfaces → measure with post‑conversion “How did you hear about us?” plus A‑SOV tracking, not just referral logs.

- Commerce/local: shoppable cards & local modules now appear; treat as performance media (schema, reviews, stock, price accuracy).

Allocate 50–70% of AEO effort to off‑site citation acquisition; the remainder to on‑site topic depth and help‑centre tail.

Creative System

Hooks that win citations

- Specificity hook: “How to migrate 50k SKUs to headless in 48 hours (with rollback).”

- Comparison hook: “X vs Y for EU SaaS: GDPR, data residency, and the SSO you’ll actually use.”

- Proof hook: “We cut vendor bills by 19% via invoice dedupe. Here’s the SQL.”

UGC vs polished: prioritise UGC‑style explainer videos and authentic Reddit posts for speed and trust; deploy polished content for affiliate placements and sales enablement.

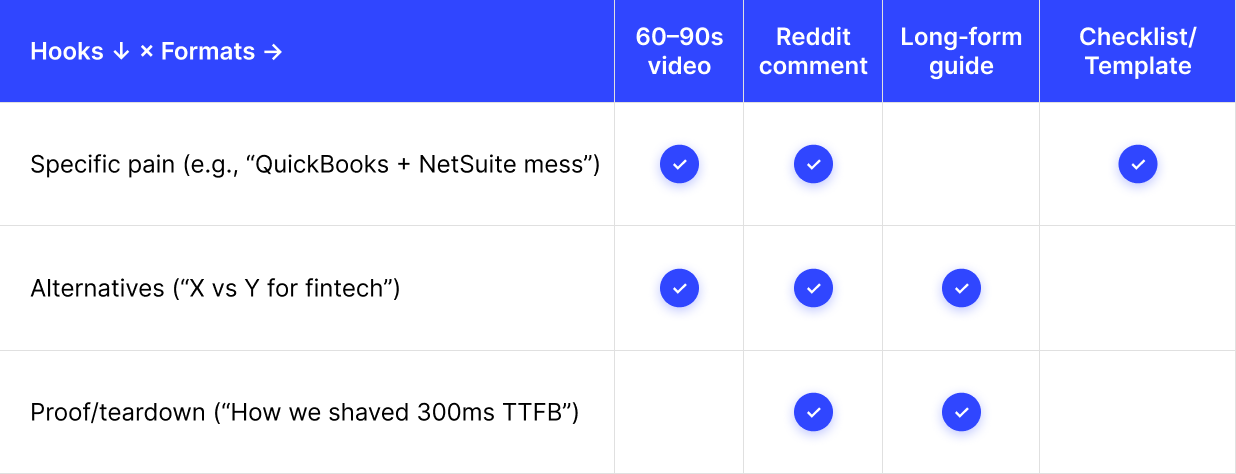

Creative testing matrix (simplified)

- Specific pain (e.g., “QuickBooks + NetSuite mess”): 60–90s video; Reddit comment; checklist/template.

- Alternatives (“X vs Y for fintech”): 60–90s video; Reddit comment; long-form guide.

- Proof/teardown (“How we shaved 300ms TTFB”): Reddit comment; long-form guide.

Ship two UGC videos + five declared Reddit answers per topic per month; measure A‑SOV change and answer inclusion.

Conversion Engine

- Landing: anchor links to sub‑questions; diagnostic CTAs (“Which website builder fits your constraints?”) to pull visitors deeper.

- Social proof: privilege third‑party quotes and “As seen in [citation domain]” callouts, because the very surfaces that cite you are the ones users now trust.

- Pricing/packaging: clarify plan limits in the same language used in chat surfaces (e.g., “Seats”, “Docs/month”, “Integrations”).

- Onboarding: answer the most common chat‑era objections with first‑run tooltips and a “Compare Providers” in‑product panel.

Recycle people’s chat‑era questions into your landing & onboarding; reduce bounce from answer clicks.

Retention & Monetisation

- Activation: ship “guided outcomes” for the top three chat‑queried use cases; instrument time‑to‑first‑value by use case.

- Lifecycle/CRM: trigger emails based on the question theme that originally brought them (e.g., “security & compliance” → send SOC 2 mapping guide).

- Expansion: use in‑product Q&A widgets seeded with your own help‑centre articles and community answers.

Bind the question to the lifecycle.

Growth Loops & Flywheels

- Content→Citation loop: original studies (benchmarks, teardown data) → picked up by affiliates/press/Reddit → cited by LLMs → you screenshot the citation and embed it on‑site → more citations.

- Help‑centre tail loop: support tickets & sales calls → new Q&A articles in subdirectory → long‑tail answers in chat → lower ticket volume and more qualified trial sign‑ups.

Prioritise loops that manufacture third‑party mentions.

Data & Measurement

Event schema:

- view_answer(engine, prompt, run_id, rank_in_answer, cited_as_brand: boolean), via your tracker’s export.

- click_from_answer(engine, referrer_url), when available.

- session_landing_context(topic_id, question_id).

- post_conversion_survey(heard_about_us = [ChatGPT | Perplexity | Gemini | Google AIO | Reddit | YouTube | Other]).
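The event schema above maps naturally onto typed records. This is a minimal sketch, assuming Python dataclasses; the event and field names follow the schema, while the `HeardAboutUs` enum, the `ANSWER_ENGINES` grouping and the `declared_share` helper are hypothetical illustrations of how you might report declared attribution (the kind of figure behind “~8% of sign‑ups”).

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Iterable, Optional, Tuple


class HeardAboutUs(Enum):
    """Answer options for the post-conversion survey question."""
    CHATGPT = "ChatGPT"
    PERPLEXITY = "Perplexity"
    GEMINI = "Gemini"
    GOOGLE_AIO = "Google AIO"
    REDDIT = "Reddit"
    YOUTUBE = "YouTube"
    OTHER = "Other"


# Hypothetical grouping: which declared sources count as "answer engine" exposure.
ANSWER_ENGINES = frozenset({HeardAboutUs.CHATGPT, HeardAboutUs.PERPLEXITY,
                            HeardAboutUs.GEMINI, HeardAboutUs.GOOGLE_AIO})


@dataclass(frozen=True)
class ViewAnswer:
    engine: str
    prompt: str
    run_id: str
    rank_in_answer: Optional[int]  # None when the brand is not named
    cited_as_brand: bool


@dataclass(frozen=True)
class ClickFromAnswer:
    engine: str
    referrer_url: str


@dataclass(frozen=True)
class SessionLandingContext:
    topic_id: str
    question_id: str


@dataclass(frozen=True)
class PostConversionSurvey:
    heard_about_us: HeardAboutUs


def declared_share(
    surveys: Iterable[PostConversionSurvey],
) -> Tuple[Dict[str, float], float]:
    """Return each source's share of responses and the combined answer-engine share."""
    counts = Counter(s.heard_about_us for s in surveys)
    total = sum(counts.values())
    if total == 0:
        return {}, 0.0
    shares = {src.value: counts[src] / total for src in HeardAboutUs}
    engine_share = sum(counts[src] for src in ANSWER_ENGINES) / total
    return shares, engine_share
```

Keeping the survey options as an enum means a typo in a form integration fails loudly instead of silently creating a new “source”.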

Attribution:

Run each question 3–5 times, vary prompts, and sample multiple engines; compute % of runs in which you’re cited, weighted by placement. (Think of A‑SOV as market share inside answers, independent of classic SERP rank.)
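A minimal sketch of the A‑SOV calculation, assuming each tracked run records the engine, question, prompt variant and the brand’s position in the answer (or `None` if absent). The 1/rank placement weight and the engine‑usage weights are assumptions, not a standard; swap the weight for a 0/1 indicator if you want the plain “% of runs cited”.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass(frozen=True)
class Run:
    engine: str
    question_id: str
    variant: int
    rank: Optional[int]  # 1 = named first; None = brand not named in this run


def placement_weight(rank: Optional[int]) -> float:
    """Hypothetical weighting: 1.0 for first mention, 1/rank after that, 0 if absent."""
    return 0.0 if rank is None else 1.0 / rank


def a_sov(runs: Iterable[Run], engine_usage: Dict[str, float]) -> Dict[str, float]:
    """Placement-weighted share of runs per engine, plus a usage-weighted blend."""
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for run in runs:
        totals[run.engine] += placement_weight(run.rank)
        counts[run.engine] += 1
    per_engine = {engine: totals[engine] / counts[engine] for engine in counts}
    # Blend only engines that were sampled, renormalising their usage weights.
    weight_sum = sum(engine_usage.get(e, 0.0) for e in per_engine)
    blended = (
        sum(per_engine[e] * engine_usage.get(e, 0.0) for e in per_engine) / weight_sum
        if weight_sum > 0
        else 0.0
    )
    return {**per_engine, "blended": blended}
```

Reporting per‑engine values alongside the blend matters because engines cite different sources; a blended number alone can hide a collapse on one surface.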

- A‑SOV: % of runs/variants where your brand appears, weighted by rank/placement and engine usage.

- Incrementality: run geo‑holds or question‑set holds, freeze work on a control set; intervene on a test set.

- When last‑touch lies: in B2B, expect branded search or “direct” to rise after answer exposure; collect declared attribution.
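For question‑set holds, a deterministic hash split keeps every question in the same arm across weekly re‑runs, and a difference‑in‑differences readout nets out engine‑wide drift (for example, Google dialling AIO exposure up or down). A sketch under those assumptions; the salt string and function names are hypothetical.

```python
import hashlib
from statistics import mean
from typing import Dict, Iterable, List, Tuple

SALT = "aeo-holdout-v1"  # hypothetical; change it only when you start a new experiment


def assign_arm(question_id: str, salt: str = SALT) -> str:
    """Deterministically assign a question to 'test' or 'control' (about 50/50)."""
    digest = hashlib.sha256(f"{salt}:{question_id}".encode("utf-8")).digest()
    return "test" if digest[0] % 2 == 0 else "control"


def split(question_ids: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Partition question IDs into (test, control) using the hash assignment."""
    test: List[str] = []
    control: List[str] = []
    for qid in question_ids:
        (test if assign_arm(qid) == "test" else control).append(qid)
    return test, control


def did_lift(before: Dict[str, float], after: Dict[str, float],
             test: List[str], control: List[str]) -> float:
    """Difference-in-differences on per-question A-SOV: test change minus control change."""
    test_change = mean(after[q] - before[q] for q in test)
    control_change = mean(after[q] - before[q] for q in control)
    return test_change - control_change
```

Hashing rather than random sampling means anyone on the team can recompute the assignment from a question ID alone, which keeps the control set genuinely frozen.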

Tooling: BrightEdge AI Catalyst, SISTRIX, seoClarity, SE Ranking’s AI visibility, plus vendor lists that index LLM surfaces.

Before you do anything else, baseline A‑SOV for 200–500 questions per topic and engine. Then test interventions by question‑set holds.

Track presence in answers, not just traffic; use controls to isolate effect.

Unit Economics & Funnel Math

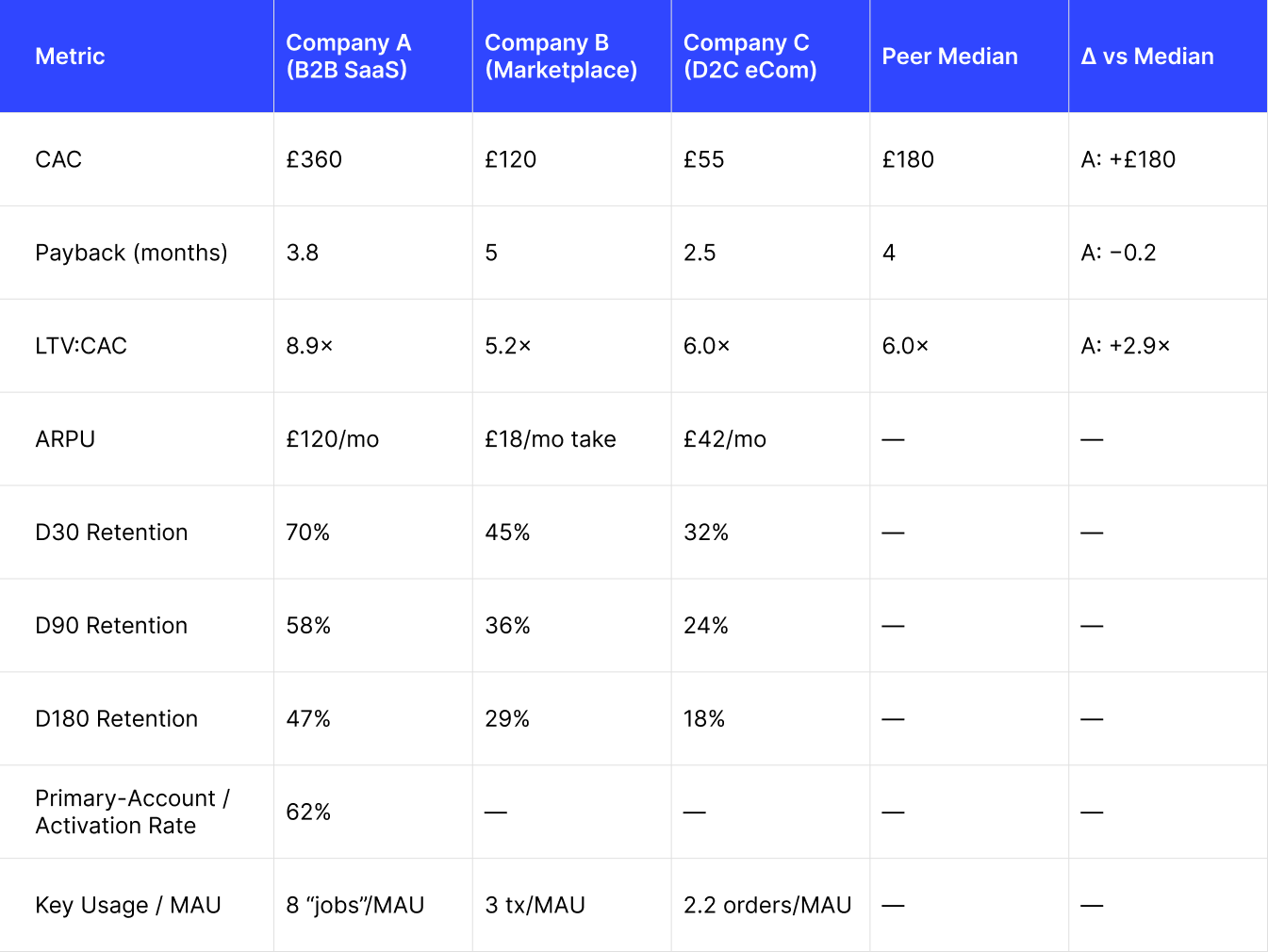

Definitions

- CAC = Sales & Marketing spend / New customers.

- Payback (months) = CAC / Monthly contribution margin per active.

- LTV (simple) = ARPU × Gross Margin ÷ Monthly churn.

Worked example (illustrative):

Assumptions (B2B SaaS):

- Classic Google organic trial→paid conversion = 2.0%; AEO answer click trial→paid = 12.0% (≈6× uplift, aligned with the Webflow example).

- ARPU = £120/month; Gross Margin = 80%; Monthly churn = 3%.

- CAC from AEO programme = £360 per new customer (content + off‑site + tracking).

- CAC from classic SEO content = £480 per new customer.

Compute LTV (simple):

LTV = 120 × 0.8 ÷ 0.03 = £3,200.

Payback – AEO:

Monthly contribution margin per active = 120 × 0.8 = £96.

Payback = 360 ÷ 96 = 3.75 months.

Payback – classic SEO:

Payback = 480 ÷ 96 = 5.0 months.

If your AEO conversion rate is even 3× classic organic, AEO often dominates on payback.
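The worked example above can be reproduced in a few lines, which makes it easy to rerun with your own inputs. This sketch simply encodes the definitions in this section; the inputs are the illustrative assumptions listed above, not benchmarks, and `ChannelEconomics` is a hypothetical helper type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChannelEconomics:
    name: str
    cac: float  # £ acquisition cost per new customer


def cac(spend: float, new_customers: int) -> float:
    """CAC = Sales & Marketing spend / new customers."""
    return spend / new_customers


def monthly_contribution(arpu: float, gross_margin: float) -> float:
    """Monthly contribution margin per active customer."""
    return arpu * gross_margin


def ltv_simple(arpu: float, gross_margin: float, monthly_churn: float) -> float:
    """LTV (simple) = ARPU x gross margin / monthly churn."""
    return monthly_contribution(arpu, gross_margin) / monthly_churn


def payback_months(cac_value: float, arpu: float, gross_margin: float) -> float:
    """Payback (months) = CAC / monthly contribution margin per active."""
    return cac_value / monthly_contribution(arpu, gross_margin)


if __name__ == "__main__":
    ARPU, GROSS_MARGIN, CHURN = 120.0, 0.80, 0.03  # illustrative inputs from above
    ltv = ltv_simple(ARPU, GROSS_MARGIN, CHURN)
    for ch in (ChannelEconomics("AEO", 360.0), ChannelEconomics("Classic SEO", 480.0)):
        months = payback_months(ch.cac, ARPU, GROSS_MARGIN)
        print(f"{ch.name}: payback {months:.2f} months, LTV/CAC {ltv / ch.cac:.1f}")
```

Run as a script, it prints `AEO: payback 3.75 months, LTV/CAC 8.9` and `Classic SEO: payback 5.00 months, LTV/CAC 6.7`.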

Peer Benchmarks (illustrative)

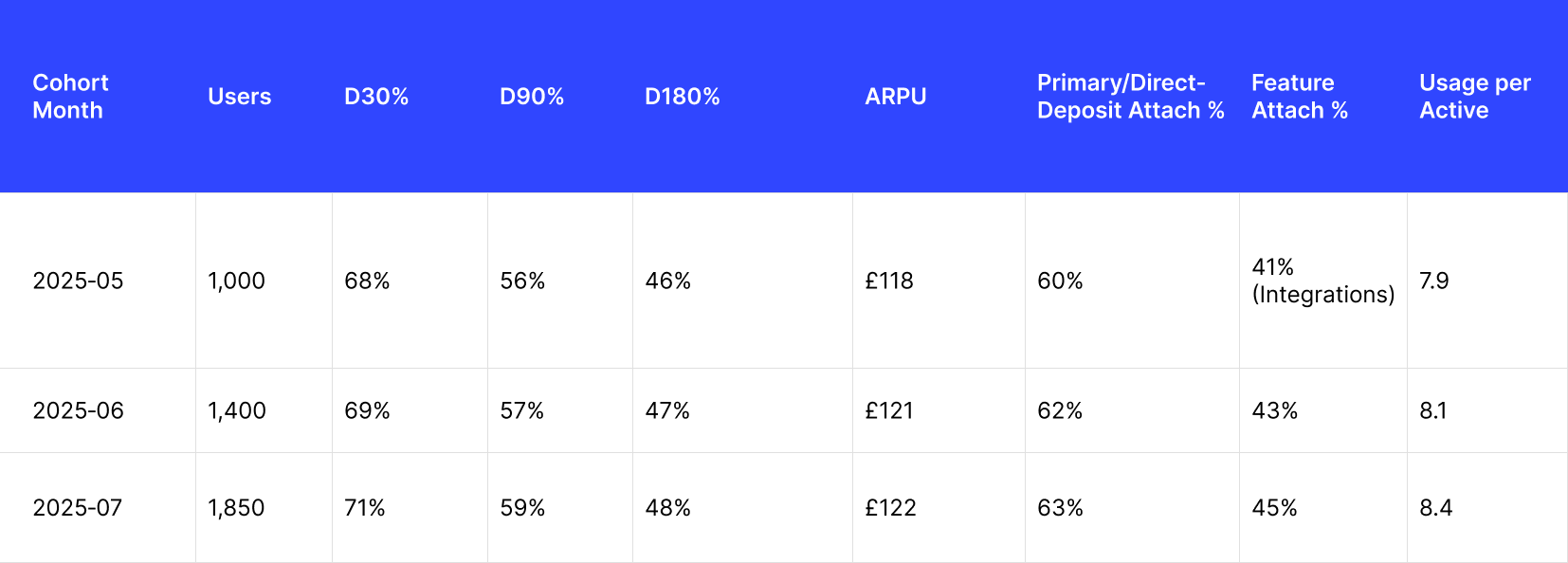

Cohorts & Attach Rates (illustrative)

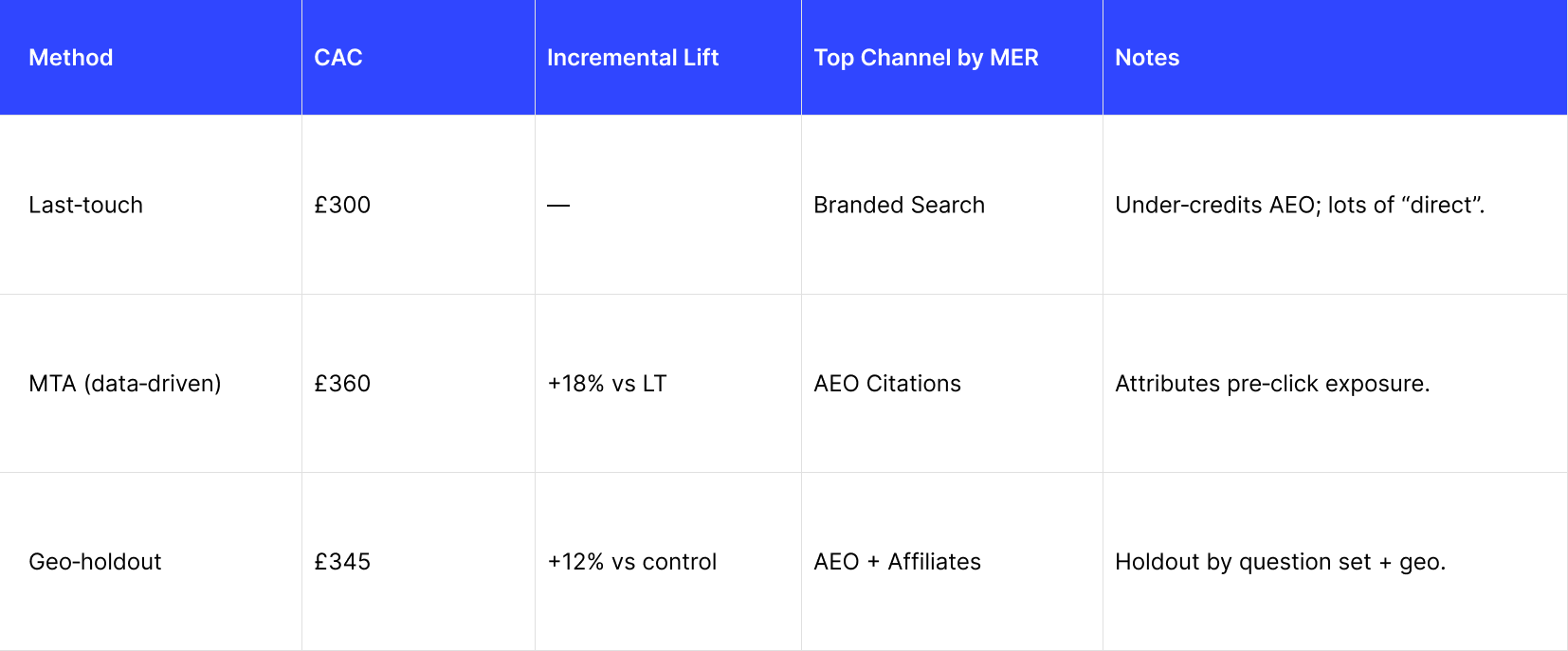

Attribution Snapshot (illustrative)

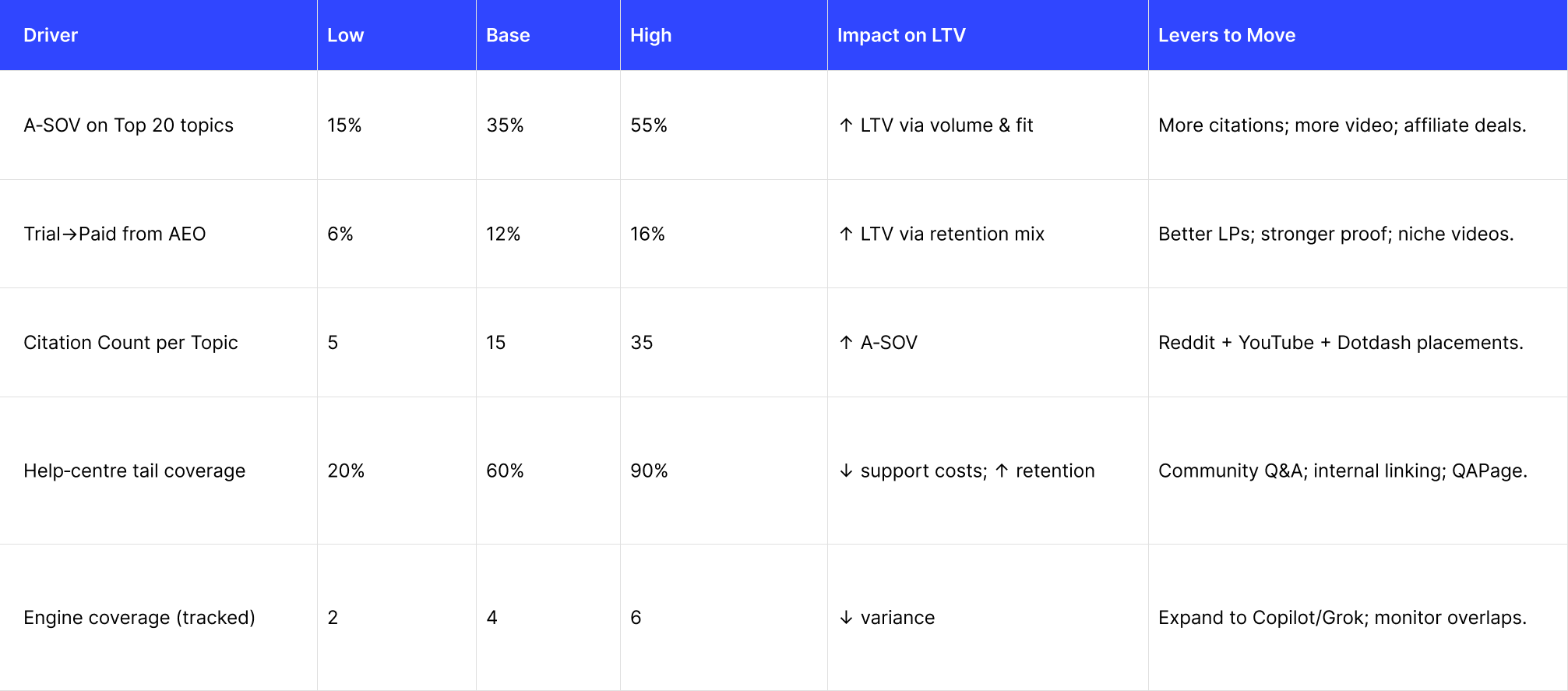

Scenario & Sensitivity

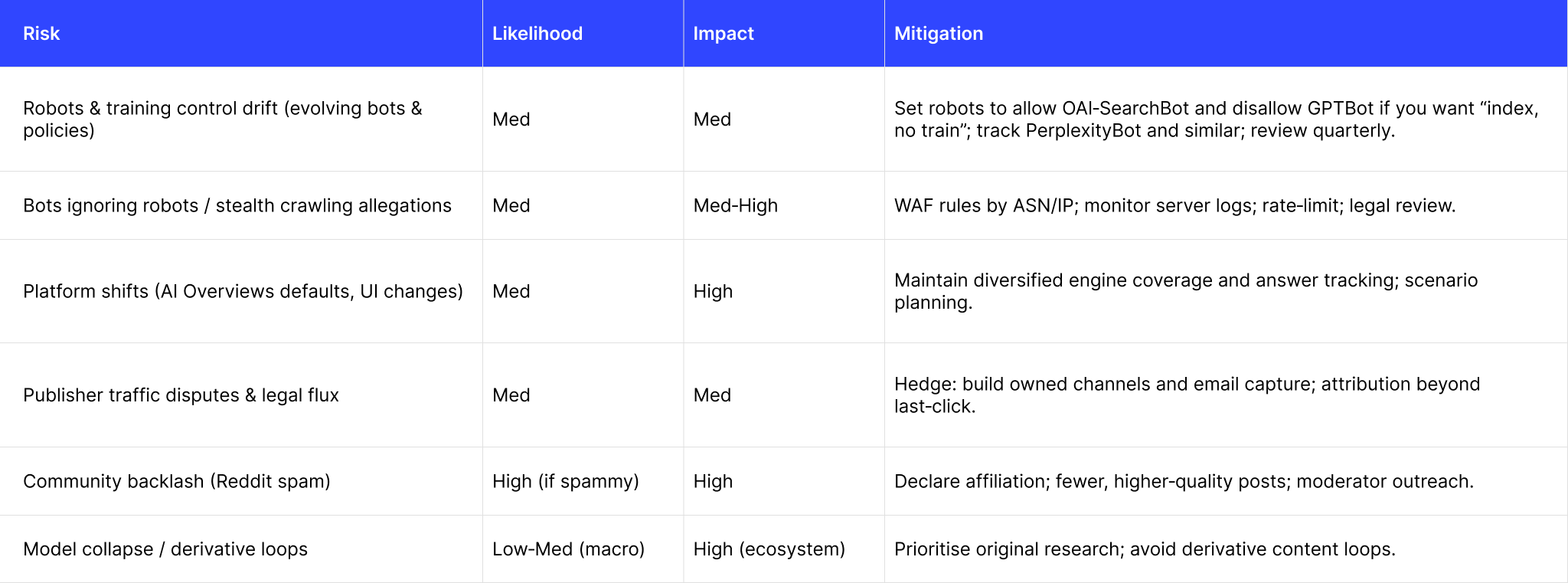

Regulatory & Risk Register

Investor Narrative Map

Translate AEO work into S‑1 style KPIs: acquisition efficiency (CAC, payback), durable cohorts (D90/D180), contribution margin, and marketing efficiency ratio (MER). Tie A‑SOV moves on core topics to pipeline created and to higher trial→paid rates versus classic SEO.

Position AEO as an efficiency engine with faster learning loops than SEO and a clearer path to payback.

Ops & Teaming

- Roles: SEO lead (topics, on‑site), AEO citations lead (Reddit/YouTube/affiliates), Analyst (tracking, A‑SOV, experiments), PMM (Information Gain & proofs), Developer advocate (for docs/help‑centre tail).

- Cadence: weekly growth review (template at end); monthly topic retro; quarterly engine coverage and robots policy audit.

- Agency vs in‑house: in‑house for Reddit/community & product nuance; agency for scalable research, tracking, affiliate relationships.

Make citations someone’s day job.

At Growthcurve we simplify the whole process with a dedicated AEO service; book a Discovery Call to learn more.

AEO in the wild (what actually moves the needle)

Reddit, the right way

The obvious (wrong) tactic is sock‑puppet spam. It gets removed, accounts get banned, and you burn trust. The tactic that does work is slower and more durable: declare who you are, say where you work, and post a specific, useful answer, on threads that already rank or get cited. You need dozens of good posts, not thousands.

Video for “boring money” topics

There are few videos for B2B mouthfuls like “AI‑enabled payment reconciliation for ERPs”. That scarcity lets a clear, 3–6 minute explainer earn citations quickly. Our hit‑rate was highest when we showed the actual UI and included downloadable config.

Affiliates and review networks

In categories like cards, consumer tech and home, Dotdash Meredith properties (Investopedia, PEOPLE, Allrecipes, The Spruce, etc.) are highly represented in both SERPs and answer citations. You’re effectively renting citation real estate.

Help‑centre tail

This is under‑invested and decisively useful in chat, where follow‑ups probe “does it do X?” Move help from a subdomain to a subdirectory, interlink heavily, and publish the obscure tails from support/sales calls (the ones no competitor has written up). Use DiscussionForumPosting if you have a community.
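When moving help from a subdomain to a subdirectory, every old URL needs a 301 to its new path. A minimal sketch of the mapping, assuming hypothetical hosts `help.example.com` and `www.example.com` and a `/help` prefix; it produces old‑to‑new pairs and leaves the redirect rules themselves to your web server or CDN.

```python
from typing import Dict, Iterable
from urllib.parse import urlsplit, urlunsplit

# Hypothetical hosts and prefix: substitute your own.
OLD_HOST = "help.example.com"
NEW_HOST = "www.example.com"
NEW_PREFIX = "/help"


def migrate_url(url: str) -> str:
    """Map a help-subdomain URL to its subdirectory equivalent; leave others unchanged."""
    parts = urlsplit(url)
    if parts.hostname != OLD_HOST:
        return url  # not a help-centre URL
    new_path = NEW_PREFIX + (parts.path or "/")
    return urlunsplit(("https", NEW_HOST, new_path, parts.query, parts.fragment))


def redirect_map(urls: Iterable[str]) -> Dict[str, str]:
    """Old URL -> new URL pairs to feed into your server's 301 rules."""
    return {u: migrate_url(u) for u in urls if migrate_url(u) != u}
```

Preserving the path one‑to‑one keeps the migration mechanical and auditable; consolidate or rename articles in a separate pass.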

If you can only do two things this quarter, do declared Reddit (quality over scale) and help‑centre tail in a subdirectory with schema. Everything else compounds on top.

So:

- Don’t make this academic. We tested AEO programmes where half the “best practices” we’d read didn’t replicate; the humble thing is to test, measure, and keep only what earns answer share‑of‑voice.

- Community beats tricks. Reddit works when you’re useful and honest; everything else tends to get removed.

- Help‑centre content, often neglected, wins outsized AEO because it answers the exact follow‑ups chat users ask. Move it to a subdirectory, cross‑link heavily, and open the long tail to your community.

The plumbing you need to control (crawl, training, and robots)

You likely want to be discoverable in answer engines without donating your content for training. Today’s practical stance for many brands:

- Allow: OAI-SearchBot (indexing for ChatGPT search/surfacing).

- Disallow: GPTBot (training).

- Decide: PerplexityBot (many allow; some don’t).

- Audit updates quarterly; policies and agent strings evolve.

Google’s AI Overviews are also moving targets. Third‑party trackers (BrightEdge, SISTRIX, seoClarity, SE Ranking) now record whether AIO appears and which sources are shown; some archive the content of the AIO box itself. Use them to monitor volatility and your A‑SOV footprint.

Add an internal robots policy page your team can update without an engineering sprint. Reconfirm your stance on OAI‑SearchBot/GPTBot/PerplexityBot every quarter. Log agent hits.
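Logging agent hits can start as a simple count over your access logs. This sketch assumes the common combined log format, where the user agent is the last quoted field, and matches on the agent tokens named in this section. User‑agent strings can be spoofed, so treat the counts as indicative unless you also verify source IPs against ranges the vendors publish, where available.

```python
import re
from collections import Counter
from typing import Iterable

# Agent tokens named in this section; extend as vendors add or rename crawlers.
AI_AGENTS = ("OAI-SearchBot", "GPTBot", "PerplexityBot")

# In the combined log format, the user agent is the last double-quoted field.
UA_PATTERN = re.compile(r'"([^"]*)"\s*$')


def count_agent_hits(lines: Iterable[str]) -> Counter:
    """Count requests per AI agent token, matching case-insensitively on the UA."""
    hits: Counter = Counter()
    for line in lines:
        match = UA_PATTERN.search(line)
        if match is None:
            continue  # not a combined-format line; skip it
        user_agent = match.group(1).lower()
        for agent in AI_AGENTS:
            if agent.lower() in user_agent:
                hits[agent] += 1
                break  # attribute each request to one agent only
    return hits
```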

Practical snippets you can lift

Robots.txt stance many brands adopt

    User-agent: GPTBot
    Disallow: /

    User-agent: OAI-SearchBot
    Allow: /

    User-agent: PerplexityBot
    Allow: /

Review quarterly; confirm against official agent docs and your legal posture.
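Before deploying a robots.txt stance like the one above, you can smoke‑test it with Python’s standard‑library `urllib.robotparser`. The example URL is hypothetical. This parser is simpler than RFC 9309’s matching rules (it applies the first matching rule rather than the most specific one), so it confirms intent for simple files like this one, not every edge case.

```python
from typing import Dict, Sequence
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: OAI-SearchBot
Allow: /

User-agent: PerplexityBot
Allow: /
"""

AGENTS = ("GPTBot", "OAI-SearchBot", "PerplexityBot")


def check_stance(robots_txt: str, url: str = "https://www.example.com/pricing",
                 agents: Sequence[str] = AGENTS) -> Dict[str, bool]:
    """Return whether each agent may fetch `url` under the given robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    for agent, allowed in check_stance(ROBOTS_TXT).items():
        print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

Wiring `check_stance` into CI against your live robots.txt is one way to make the quarterly review catch accidental regressions.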

Schema to unlock tail eligibility

- QAPage for true Q&A pages.

- DiscussionForumPosting for community threads.
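A minimal sketch of generating both schema types as JSON‑LD, assuming you render them server‑side into a `<script type="application/ld+json">` tag. The properties shown are a small subset of schema.org’s; check Google’s structured‑data documentation for the currently required and recommended properties before shipping. Escaping `</` stops answer text from prematurely closing the script tag.

```python
import json
from typing import Any, Dict


def qa_page(question: str, answer: str, answer_url: str) -> Dict[str, Any]:
    """Minimal schema.org QAPage with a single accepted answer."""
    return {
        "@context": "https://schema.org",
        "@type": "QAPage",
        "mainEntity": {
            "@type": "Question",
            "name": question,
            "answerCount": 1,
            "acceptedAnswer": {"@type": "Answer", "text": answer, "url": answer_url},
        },
    }


def forum_post(headline: str, text: str, author: str,
               date_published: str, url: str) -> Dict[str, Any]:
    """Minimal schema.org DiscussionForumPosting for a community thread."""
    return {
        "@context": "https://schema.org",
        "@type": "DiscussionForumPosting",
        "headline": headline,
        "text": text,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601, e.g. "2025-01-15"
        "url": url,
    }


def to_script_tag(data: Dict[str, Any]) -> str:
    """Serialise to a JSON-LD script tag; escape "</" so text cannot close the tag."""
    payload = json.dumps(data, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'
```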

Trackers to quantify A‑SOV / AIO volatility

- BrightEdge AI Catalyst; SISTRIX AIO filters; seoClarity AIO; SE Ranking AI Visibility (also tracks ChatGPT/Gemini/Copilot).

Step-by-Step AEO Guide

A. Weeks 0–2 — Map topics & baselines

1. Pull money keywords (your PPC, competitors’ PPC), convert to questions, cluster into ~20 topics.

2. Stand up A‑SOV tracking across ChatGPT, Gemini, Perplexity, Copilot, and Google AIO for 200–500 questions; include prompt variants; run each 3–5 times.

3. Identify top citations per topic (affiliates, Reddit threads, videos) and your current presence.
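Tracking volume multiplies quickly: questions × prompt variants × runs × engines. A sketch of a run plan, assuming 3 prompt variants per question (a hypothetical default) and the five surfaces listed in this step.

```python
from itertools import product
from typing import List, Sequence, Tuple

ENGINES = ("ChatGPT", "Gemini", "Perplexity", "Copilot", "Google AIO")


def run_plan(question_ids: Sequence[str], variants_per_question: int = 3,
             runs_per_variant: int = 3,
             engines: Sequence[str] = ENGINES) -> List[Tuple[str, str, int, int]]:
    """One (engine, question_id, variant_index, run_index) tuple per answer to collect."""
    return list(product(engines, question_ids,
                        range(variants_per_question), range(runs_per_variant)))
```

At 200 questions, 3 variants and 3 runs, that is 9,000 answers per sweep across five engines; at 500 questions and 5 runs it rises to 37,500, which is worth budgeting before you commit to a cadence.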

B. Weeks 3–8 — Build & earn

4. Ship one topic page/week answering 100+ sub‑questions; add FAQ/QAPage markup; cross‑link with docs/help.

5. Reddit plan: 2–3 declared, useful answers per topic/week; one AMA or deep thread/month.

6. Video: 2 explainer videos/week targeting high‑LTV tail terms; publish to YouTube & Vimeo.

7. Affiliates: buy/earn 1–2 placements per priority topic (Dotdash Meredith et al.) where ethical and permitted.

8. Robots policy: if desired, allow OAI‑SearchBot (index) and disallow GPTBot (training). Add PerplexityBot rules. Monitor.

C. Weeks 9–12 — Measure & scale

9. Re‑measure A‑SOV; attribute movement to interventions vs controls.

10. Scale what moves the needle (more Reddit/video on winners; new affiliate category where under‑cited).

11. Add help‑centre tail: source from sales & support calls; publish weekly; open community Q&A.

Guardrails: avoid spam; never astroturf Reddit; fact‑check video claims; maintain legal review for affiliate language.

Experiments that pay their way

Hypothesis 1 (Reddit): Declared, high‑quality Reddit answers on threads that already appear as citations increase topic‑level A‑SOV by ≥10pp in 30 days.

- Unit: topic question sets

- Sample/power: ~120 questions per arm for 10pp MDE at 0.8 power (adjust with your tracker’s variance)

- Primary metric: Δ A‑SOV; Guardrails: mod removals <10%, sentiment ≥ baseline

- Stopping rule: 30 days or 80% power

- Decision: scale if Δ≥8pp and guardrails hold
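The ~120‑per‑arm figure can be sanity‑checked with the standard normal‑approximation sample‑size formula for comparing two means. This sketch assumes the unit is a question, the metric is its change in A‑SOV on a 0 to 1 scale, and a two‑sided α of 0.05; with an assumed per‑question standard deviation of 0.28 it returns 124, in line with the figure above. That standard deviation is an assumption: estimate it from your own tracker’s repeated runs.

```python
import math
from statistics import NormalDist


def n_per_arm(mde: float, sd: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Questions per arm to detect a difference `mde` in mean per-question change.

    Normal approximation: n = 2 * sd^2 * (z_{1-alpha/2} + z_{power})^2 / mde^2.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(2 * sd ** 2 * (z_alpha + z_beta) ** 2 / mde ** 2)
```

Because n scales with 1/MDE², halving the detectable effect roughly quadruples the questions you need per arm.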

Hypothesis 2 (Video): Shipping 2 explainer videos/mo for neglected B2B tails increases answer citations for those tails by ≥15% within 45 days.

Hypothesis 3 (Help‑centre): Subdomain→subdirectory migration plus QAPage/DiscussionForumPosting schema increases answer mentions and reduces ticket volume for those topics by ≥10% in 60 days.

30‑day learning agenda: Week 1 seeding (Reddit + video), Week 2 topic‑page shipments, Week 3 affiliate outreach, Week 4 readouts; controls untouched.

Key Metrics

- A‑SOV (Answer Share‑of‑Voice): % of runs/variants where we’re mentioned, by engine & topic.

- Citation density: avg. # of distinct third‑party sources that mention our brand per topic.

- Trial→Paid from AEO: conversion rate from answer clicks.

- Topic coverage: % of mapped sub‑questions answered on our pages/help centre.
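Two of these metrics are easy to get subtly wrong: citation density should average over all tracked topics (including those with zero mentions), and coverage should be computed against the mapped question set, not against whatever you happened to publish. A sketch under those assumptions, taking hypothetical (topic_id, source_domain) pairs from your tracker’s export, already filtered to third‑party domains.

```python
from typing import Dict, Iterable, Set, Tuple


def citation_density(mentions: Iterable[Tuple[str, str]], topic_ids: Iterable[str]) -> float:
    """Average count of distinct third-party domains naming the brand, per tracked topic.

    Topics with no mentions count as zero, so gaps pull the average down.
    """
    sources: Dict[str, Set[str]] = {topic: set() for topic in topic_ids}
    for topic_id, domain in mentions:
        if topic_id in sources:
            sources[topic_id].add(domain)
    if not sources:
        return 0.0
    return sum(len(domains) for domains in sources.values()) / len(sources)


def topic_coverage(mapped: Set[str], answered: Set[str]) -> float:
    """Percentage of mapped sub-questions answered on our pages or help centre."""
    if not mapped:
        return 0.0
    return 100.0 * len(mapped & answered) / len(mapped)
```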

Common Pitfalls & Anti‑Patterns

- Optimising for head ranks only → optimise for citation count and quality across sources.

- Spammy Reddit automation → gets removed/banned; use declared, helpful participation.

- Ignoring help‑centre tail → misses the questions chat users actually ask; move to subdirectory, cross‑link, QAPage.

- Assuming AI Overviews always kill clicks (or never do) → plan for variance by vertical; instrument incrementality.

- Blocking all AI bots → you may remove yourself from answer indices; if needed, allow search bots and disallow training bots.

What I’d Do Differently Next Time

- Start earlier with A‑SOV tracking to see platform differences (e.g., Perplexity often overlaps more with Google SERPs; ChatGPT’s overlap can be lower), and tune which citations to chase per engine.

- Treat affiliate & review networks as negotiated distribution, not an afterthought.

- Put Help Centre in the core content plan from day one; it’s where follow‑ups live.

AEO for different company stages

Startups (pre‑PMF → early PMF)

Skip classic SEO head terms. Go straight to off‑site citations (Reddit, YouTube, 1–2 affiliate placements) and long‑tail topic pages that answer neglected questions. You can be named in answers within days even with low domain authority.

Scale‑ups

Parallelise: add topic depth on‑site, measure A‑SOV across engines, and assign an owner to citations. Start with 15 topics, then expand to 30–40.

Enterprises

Expect procurement‑style questions. Prioritise security/compliance content, localisation, and integration matrices; seed your answers into analyst and review networks that show up as citations.

Regardless of stage, carve out an explicit AEO citations lead (different muscle than SEO). Make citations someone’s day job.

The uncomfortable bit: AI content and the risk of model collapse

We ran our own checks on AI‑generated articles and saw the same thing many now report: purely auto‑generated content without a human in the loop doesn’t sustain. Engines are incentivised to avoid becoming “search for AI output” and have been tuning away derivative, low‑novelty content for years. The broader research community has also flagged a structural risk: model collapse, when models trained recursively on their own outputs lose the tails of the distribution, converging on bland point estimates. That’s the opposite of the “wisdom of the crowd” that good answers depend on.

Prioritise information gain, unique data, original analysis, and domain expertise. If your content would not teach a careful editor something new, it probably won’t earn citations in answers.